Can facial analysis technology create a child-safe internet? | Identity cards


Suppose you pulled out your phone this morning to post a pic to your favourite social network – let’s call it Twinstabooktok – and were asked for a selfie before you could log on. The picture you submitted wouldn’t be sent anywhere, the service assured you: instead, it would use state-of-the-art machine-learning techniques to work out your age. In all likelihood, once you had submitted the scan, you could continue on your merry way. If the service guessed wrong, you could appeal, though that might take a bit longer.

The upside of all of this? The social network would be able to know that you were an adult user and provide you with an experience largely free of parental controls and paternalist moderation, while children who tried to sign up would be given a restricted version of the same experience.

Depending on your position, that might sound like a long-overdue corrective to the wild west tech sector, or a hopelessly restrictive attempt to achieve an impossible end: a child-safe internet. Either way, it’s far closer to reality than many realise.

In China, gamers who want to log on to play mobile games after 10pm must prove their age, or get turfed off, as the state tries to tackle gaming addiction. “We will conduct a face screening for accounts registered with real names and which have played for a certain period of time at night,” Chinese gaming firm Tencent said last Tuesday. “Anyone who refuses or fails the face verification will be treated as a minor, as outlined in the anti-addiction supervision of Tencent’s game health system, and kicked offline.” Now, the same approach may be coming to the UK, where a series of government measures are about to come into force in rapid succession, potentially changing the internet for ever.

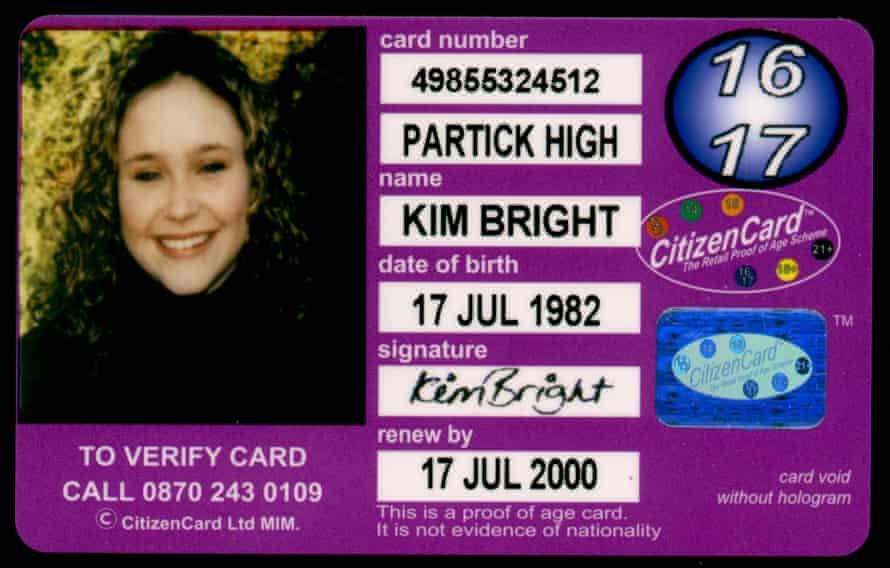

The fundamental problem with verifying the age of an internet user is obvious enough: if, on the internet, nobody knows you’re a dog, then they certainly don’t know you’re a 17-year-old. In the offline world, we have two main approaches to age verification. The first is some form of official ID. In the UK, that’s often a driving licence, while for children it may be any one of a few private-sector ID cards, such as CitizenCard or MyID Card. Those, in turn, are backed by a rigorous chain of proof-of-identity, usually leading back to a birth certificate – the final proof of age. But just as important for the day-to-day functioning of society is the other approach: looking at people. There’s no need for an ID card system to stop seven-year-olds sneaking into an 18-rated movie – it’s so obvious that it doesn’t even feel like age verification.

But proving your age with ID, it turns out, is a very different thing online to off, says Alec Muffett, an independent security researcher and former Open Rights Group director: “Identity is a concept that is broadly misunderstood, especially online. Because ‘identity’ actually means ‘relationship’. We love to think in terms of identity meaning ‘credential’, such as ‘passport’ or ‘driving licence’, but even in those circumstances we are actually talking about ‘bearer of passport’ and ‘British passport’ – both relationships – with the associated booklet acting as a hard-to-forge ‘pivot’ between the two relationships.” In other words: even in the offline world, a proof of age isn’t simply a piece of paper that says “I am over 18”; it’s more like an entry in a complex nexus that says: “The issuer of this card has verified that the person pictured on the card is over 18 by checking with a relevant authority.”

Online, if you simply replicate the surface level of offline ID checks – flashing a card to someone who checks the date on it – you break that link between the relationships. It’s no good proving you hold a valid driving licence, for instance, if you can’t also prove that you are the person named on the licence. But if you do prove that, then the site you are visiting will have a cast-iron record of who you are, when you visited, and what you did while you were there.

So in practice, age verification can become ID verification, which can in turn become, Muffett warns, “subjugated to cross-check and revocation from a cartel of third parties… all gleefully rubbing their hands together at the monetisation opportunities”.

Those fears have scuppered more than just attempts to build online proof-of-age systems. From the Blair-era defeat of national ID cards onwards, the British people have been wary of anything that seems like a national database. Begin tracking people in a centralised system, they fear, and it’s the first step on an inexorable decline towards a surveillance state. But as the weight of legislation piles up, it seems inevitable that something will change soon.

The Digital Economy Act (2017) was mostly a tidying-up piece of legislation, making tweaks to a number of issues raised since the passage of the much more wide-ranging 2010 act of the same name. But one provision, part three of the act, was an attempt to do something that had never been done before, and introduce a requirement for online age verification.

The act was comparatively narrow in scope, applying only to commercial pornographic websites, but it required them to ensure that their users were over 18. The law didn’t specify how they were to do that, instead preferring to turn the task of finding an acceptable solution over to the private sector. Proposals were dutifully suggested, ranging from a “porn pass”, which users could buy in person from a newsagent and enter into the site at a later date, to algorithmic attempts to leverage credit card data and existing credit check services to do it automatically (with a less than stunning success rate). Sites found to be providing commercial pornography to under-18s would be fined up to 5% of their turnover, and the British Board of Film Classification (BBFC) was named as the expected regulator, responsible for drawing up the detailed regulations.

And then… nothing happened. The scheme was supposed to begin in 2018 but didn’t. In 2019, a rumoured spring start was missed, but the government did finally set a date, two years after the act was passed: July that year. But just days before the regulations were due to take effect, the government said it had failed to notify the European Commission and delayed the scheme further, by “in the region of six months”. Then suddenly, in October 2019, as that deadline was again approaching, the scheme was killed for good.

The news saddened campaigners, such as Vanessa Morse, chief executive of Cease, the Centre to End All Sexual Exploitation. “It’s staggering that pornography sites do not yet have age verification,” she says. “The UK has an opportunity to be a leader in this. But because it’s prevaricated and kicked into the long grass, a lot of other countries have taken it over already.”

Morse argues that the lack of age-gating on the internet is causing serious harm. “The online commercial pornography industry is woefully unregulated. It’s had several decades to explode in terms of growth, and it’s barely been regulated at all. As a result, pornography sites do not distinguish between children and adult users. They are not neutral and they are not naive: they know that there are 1.4 million children visiting pornography sites every month in the UK.

“And 44% of boys aged between 11 and 16, who regularly view porn, said it gave them ideas about the type of sex they wanted to try. We know that the children’s consumption of online porn has been associated with a dramatic increase in child-on-child sexual abuse over the past few years. Child-on-child sexual abuse now constitutes about a third of all child sexual abuse. It’s huge.”

Despite protestations from Cease and others, the government shows no signs of resurrecting the porn block. Instead, its child-protection efforts have splintered across an array of different initiatives. The online harms bill, a Theresa May-era piece of legislation, was revived by the Johnson administration and finally presented in draft form in May: it calls for social media platforms to take action against “legal but harmful” content, such as that which promotes self-harm or suicide, and imposes requirements on them to protect children from inappropriate content.

Elsewhere, the government has given non-binding “advice” to communications services on how to “improve the safety of your online platform”: “You can also prevent end-to-end encryption for child accounts,” the advice reads in part, because it “makes it more difficult for you to identify illegal and harmful content occurring on private channels.” Widely interpreted as part of a larger government push to get WhatsApp to turn off its end-to-end encryption – long a bane of law enforcement, which resents the inability to easily intercept communications – the advice pushes for companies to recognise their child users and treat them differently.

Most immediate, however, is the Age Appropriate Design code. Introduced in the Data Protection Act 2018, which implemented GDPR in the UK, the code sees the information commissioner’s office laying out a new standard for internet companies that are “likely to be accessed by children”. When it comes into force in September this year, the code will be comprehensive, covering everything from requirements for parental controls to restrictions on data collection and bans on “nudging” children to turn off privacy protections, but the key word is “likely”: in practice, some fear, it draws the net wide enough that the whole internet will be required to declare itself “child friendly” – or to prove that it has blocked children.

The NSPCC is strongly in support of the code. “Social networks should use age-assurance technology to recognise child users and in turn ensure they are not served up inappropriate content by algorithms and are given greater protections, such as the most stringent privacy settings,” says Alison Trew, senior child safety online policy officer at the NSPCC. “This technology must be flexible and adaptable to the varied platforms used by young people – now and to new sites in the future – so better safeguards for children’s rights to privacy and safety can be built in alongside privacy protections for all users.”

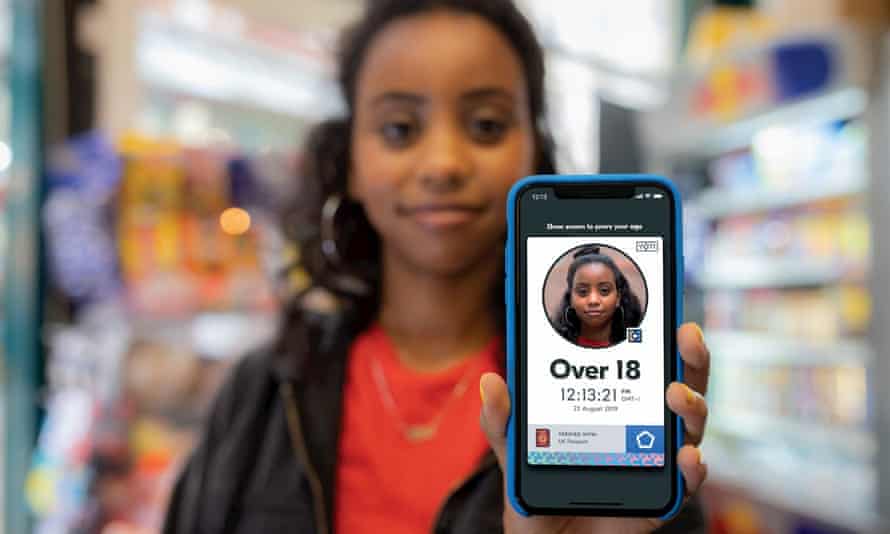

Which brings us back to the start, and the social media service asking for a selfie at account creation. Because the code’s requirements are less rigorous than the porn block, providers are free to innovate a bit more. Take Yoti, for instance: the company provides a range of age verification services, partnering with CitizenCard to offer a digital version of its ID, and working with self-service supermarkets to experiment with automatic age recognition of individuals. John Abbott, Yoti’s chief business officer, says the system is already as good as a person at telling someone’s age from a video of them, and has been tested against a wide range of demographics – including age, race and gender – to ensure that it’s not wildly miscategorising any particular group. The company’s most recent report claims that a “Challenge 21” policy (blocking under-18s by asking for strong proof of age from people who look under 21) would catch 98% of 17-year-olds and 99.15% of 16-year-olds, for instance.
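The appeal of a “Challenge 21” buffer is that the system never has to tell a 17-year-old from an 18-year-old; it only has to judge whether someone looks under 21, and anyone who does is asked for a document. The Python below is a minimal sketch of that rule under stated assumptions, not Yoti’s implementation: the names `challenge_21`, `catch_rate` and `Decision` are hypothetical, and the age estimate is assumed to come from some facial age-estimation model treated as a black box.

```python
from dataclasses import dataclass
from typing import List, Optional

LEGAL_AGE = 18      # minimum age the service must enforce
CHALLENGE_AGE = 21  # buffer: anyone estimated below this is asked for ID


@dataclass
class Decision:
    allowed: bool
    needs_id: bool
    reason: str


def challenge_21(estimated_age: float, verified_age: Optional[int] = None) -> Decision:
    """Apply a Challenge 21-style rule (hypothetical sketch).

    estimated_age: age guessed by an assumed facial age-estimation model.
    verified_age: age from a strong proof of age such as an ID card,
        or None if no document has been checked yet.
    """
    if estimated_age >= CHALLENGE_AGE:
        # Looks comfortably over 18: let through without a document check.
        return Decision(True, False, "estimate at or above challenge age")
    if verified_age is None:
        # Looks under 21: the estimate alone is not trusted, so ask for ID.
        return Decision(False, True, "estimate below challenge age; ID required")
    if verified_age >= LEGAL_AGE:
        return Decision(True, False, "ID confirms an adult")
    return Decision(False, False, "ID shows an under-18")


def catch_rate(estimates_for_minors: List[float]) -> float:
    """Fraction of known under-18s whose estimate falls below CHALLENGE_AGE,
    i.e. who would be asked for ID rather than waved through."""
    if not estimates_for_minors:
        raise ValueError("need at least one estimate")
    caught = sum(1 for age in estimates_for_minors if age < CHALLENGE_AGE)
    return caught / len(estimates_for_minors)


# Illustrative, made-up estimates for four under-18s.
print(catch_rate([16.8, 18.2, 19.9, 22.4]))
```

With those made-up estimates, `catch_rate` returns 0.75: the one minor estimated at 22.4 would be waved through without a check. Percentages such as the 98% quoted for 17-year-olds presumably measure something like this fraction over a large test set, which is also why the buffer is set well above 18.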

“It’s facial analysis, not facial recognition,” Abbott’s colleague Julie Dawson, director of regulatory and policy, adds. “It’s not recognising my face one-to-one, all it’s trying to work out is my age.” That system, the company thinks, could be deployed at scale almost overnight, and for companies that simply need to prove that they aren’t “likely” to be accessed by children, it could be a compelling offer.

It’s not, of course, something that would trouble a smart 14-year-old – or even just a regular 14-year-old with a phone and an older sibling willing to stand in for the selfie – but perhaps a bit of friction is better than none.